Panagiotis Antoniadis

PhD Fellow in the Department of Biology at the University of Copenhagen

Section for Computational and RNA Biology

Centre for Basic Machine Learning Research in Life Science

Pioneer Centre for Artificial Intelligence

I am an ELLIS PhD student in Machine Learning at the University of Copenhagen, supervised by Ole Winther and Simon Olsson. My research focuses on generative models for accelerating molecular dynamics simulations. I am also interested in large-scale training of DNA language models.

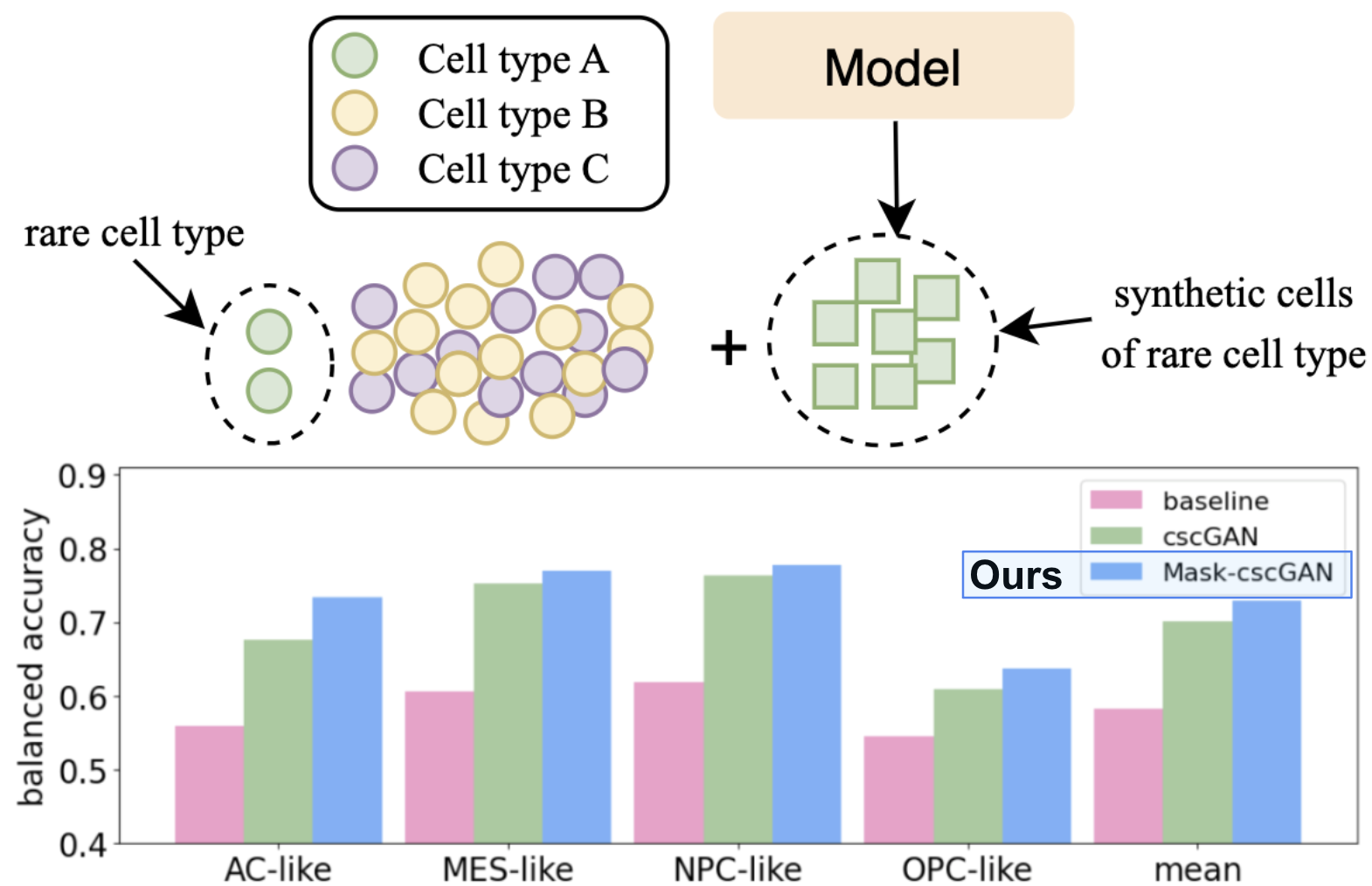

Previously, I worked at DeepLab, where I developed generative models for transcriptomics and deep neural networks for EEG-based brain-computer interfaces.

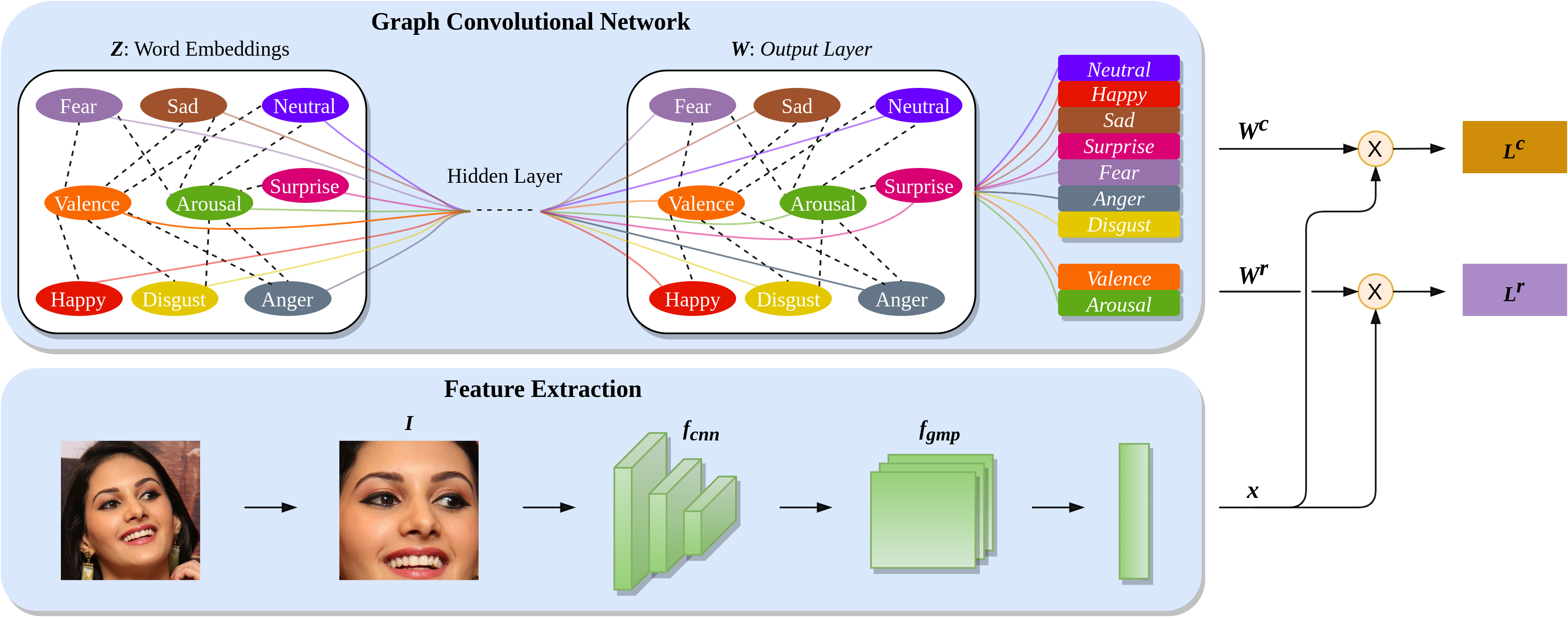

I hold a BSc and MSc degree in Electrical and Computer Engineering from NTUA, where I completed my thesis on visual emotion recognition under the supervision of Petros Maragos.

Note: If you are planning to apply for the ELLIS PhD program, feel free to send me questions about the program.

news

| Date | News |
|---|---|
| Nov 17, 2025 | TA in the “Deep Learning” course of UCPH |
| Nov 03, 2025 | I am presenting our poster on Multimodal Genomic Foundational Models at the AI in Science Summit 2025 in Copenhagen. |
| Sep 01, 2025 | I am presenting our poster “Can Pretrained Models be useful preconditioners for Molecular Dynamics Surrogates?” at the Benzon Symposium on Protein structure prediction and design in Copenhagen. |
| Sep 01, 2024 | Started an ELLIS PhD at the University of Copenhagen under the supervision of Ole Winther. |